以下是科研实习第二周的进度

周前问题

- 核心问题:编译时自适应硬件 or 运行时自适应硬件

psc-code/psc 实现思路

我们首先分析一下psc的代码实现,从CMakeLists.txt出发:

# file: root/CMakeLists.txt

set(PSC_GPU "host" CACHE STRING "Build with GPU supoort (host/cuda/hip)")

set_property(CACHE PSC_GPU PROPERTY STRINGS "host;cuda;hip")

option(USE_VPIC "Interface with VPIC" OFF)

option(USE_GTEST_DISCOVER_TESTS "Run tests to discover contained googletest cases" OFF)

psc_option(ADIOS2 "Build with adios2 support" AUTO)

option(PSC_USE_NVTX "Build with NVTX support" OFF)

option(PSC_USE_RMM "Build with RMM memory manager support" OFF)

option(PSC_BOUNDS_CHECK "Turn on bounds-checking" OFF)

option(PSC_USE_PERFETTO "Turn on perfetto-based tracing" OFF)

option(USE_CUDA "Build CUDA components" OFF)

由CMakeLists.txt可知,psc-code/psc的实现显然是通过在编译期传递环境变量来进行选择性的编译。

接下来看看psc-code/psc在不同设备上运行的实现如何:

首先我们还是先看看psc-code/psc是怎么实现ROCm/CUDA的分离的:

# file: root/CMakeLists.txt

if(PSC_GPU STREQUAL "cuda")

# This is needed on Summit when not using modules (in a spack build) to

# make sure that nvcc uses the same host compiler that was otherwise

# specified

set(CMAKE_CUDA_HOST_COMPILER "${CMAKE_CXX_COMPILER}")

enable_language(CUDA)

find_package(Thrust 1.10.0 REQUIRED)

thrust_create_target(Thrust)

set(USE_CUDA ON)

elseif(PSC_GPU STREQUAL "hip")

set(USE_CUDA ON)

else()

set(USE_CUDA OFF)

endif()

能够看到,在psc-code/psc中使用ROCm-HIP来进行ROCm设备上的计算加速,ROCm-HIP提供了一套完整的CUDA兼容API,从而保证ROCm/CUDA使用同一套代码即可兼容。

注意:ROCm-HIP在深度学习方向无法与CUDA兼容,需要使用ROCm原生方案

注意:ROCm自带Thrust库,无需额外link

然后我们看看在代码中,psc-config/psc的实现,我们取其中一个文件为例

#file root/src/include/psc.hxx

template <typename PscConfig, typename Diagnostics, typename InjectParticles>

struct Psc

{

using Mparticles = typename PscConfig::Mparticles;

using MfieldsState = typename PscConfig::MfieldsState;

using Balance = typename PscConfig::Balance;

using Sort = typename PscConfig::Sort;

using Collision = typename PscConfig::Collision;

using Checks = typename PscConfig::Checks;

using Marder = typename PscConfig::Marder;

using PushParticles = typename PscConfig::PushParticles;

using PushFields = typename PscConfig::PushFields;

using Bnd = typename PscConfig::Bnd;

using BndFields = typename PscConfig::BndFields;

using BndParticles = typename PscConfig::BndParticles;

using Dim = typename PscConfig::Dim;

#ifdef VPIC

using AccumulateOps = typename PushParticles::AccumulateOps;

#endif

// ----------------------------------------------------------------------

// ctor

Psc(const PscParams& params, Grid_t& grid, MfieldsState& mflds,

Mparticles& mprts, Balance& balance, Collision& collision, Checks& checks,

Marder& marder, Diagnostics& diagnostics,

InjectParticles& inject_particles)

: p_{params},

grid_{&grid},

mflds_{mflds},

mprts_{mprts},

balance_{balance},

collision_{collision},

checks_{checks},

marder_{marder},

bndp_{grid},

diagnostics_{diagnostics},

inject_particles_{inject_particles},

checkpointing_{params.write_checkpoint_every_step}

{

time_start_ = MPI_Wtime();

assert(grid.isInvar(0) == Dim::InvarX::value);

assert(grid.isInvar(1) == Dim::InvarY::value);

assert(grid.isInvar(2) == Dim::InvarZ::value);

int rank;

MPI_Comm_rank(MPI_COMM_WORLD, &rank);

log_.open("mem-" + std::to_string(rank) + ".log");

#ifdef USE_CUDA

mem_stats_csv_header(log_);

#endif

initialize_stats();

initialize();

}

}

主要思路是在CPU运行为主体的情况下,在需要GPU的地方进行插入GPU专用的代码。

运行期可行的思路

WebGPU标准

WebGPU API 使 web 开发人员能够使用底层系统的 GPU(图形处理器)进行高性能计算并绘制可在浏览器中渲染的复杂图形。

WebGPU 是 WebGL 的继任者,为现代 GPU 提供更好的兼容、支持更通用的 GPU 计算、更快的操作以及能够访问到更高级的 GPU 特性。

WebGPU 将物理 GPU 硬件视为 GPU 适配器(GPUAdapters)。它通过 GPU 设备(GPUDevice)提供与适配器的连接,GPU 设备管理资源,并通过设备的 GPU 队列(GPUQueues)执行命令。GPU 设备可能有自己的内存,可以高速访问处理单元。GPU 缓冲区(GPUBuffer)和 GPU 纹理(GPUTexture)是由 GPU 内存支持的物理资源。GPU 命令缓冲区(GPUCommandBuffer)和 GPU 渲染包(GPURenderBundle)是用户记录命令的容器。GPU 着色器模块(GPUShaderModule)包含着色器代码。其他资源,例如 GPU 采样器(GPUSampler)或 GPU 绑定组(GPUBindGroup),配置 GPU 使用物理资源的方式。

WebGPU本地实现

注意:wgpu-native是wgpu.rs的C++调用

2024-07-26 补充

是AnswerDotAI提供的底层WebGPU接口

这个思路我认为是可以使用的最优的方案

WGSL

WGSL是运行在WebGPU上的着色器语言,下面是一个简单的Matrix乘法并行化

struct Matrix {

data : array<array<f32, 4>, 4>;

};

@group(0) @binding(0) var<storage, read> A : Matrix;

@group(0) @binding(1) var<storage, read> B : Matrix;

@group(0) @binding(2) var<storage, read_write> C : Matrix;

@compute @workgroup_size(1, 1, 1)

fn main(@builtin(global_invocation_id) global_id : vec3<u32>) {

let x = global_id.x;

let y = global_id.y;

var sum = 0.0;

for (var k = 0u; k < 4u; k = k + 1u) {

sum = sum + A.data[y][k] * B.data[k][x];

}

C.data[y][x] = sum;

}

下面是在C++中使用Google Dawn调用WGSL加速计算的代码样例

wgpu::Device InitializeDawnDevice() {

wgpu::Device device;

return device;

}

void CreateMatrixMultiplicationPipeline(wgpu::Device device, wgpu::ComputePipeline& pipeline, wgpu::BindGroup& bindGroup) {

const char* shaderCode = R"(

struct Matrix {

data : array<array<f32, 4>, 4>;

};

@group(0) @binding(0) var<storage, read> A : Matrix;

@group(0) @binding(1) var<storage, read> B : Matrix;

@group(0) @binding(2) var<storage, read_write> C : Matrix;

@compute @workgroup_size(1, 1, 1)

fn main(@builtin(global_invocation_id) global_id : vec3<u32>) {

let x = global_id.x;

let y = global_id.y;

var sum = 0.0;

for (var k = 0u; k < 4u; k = k + 1u) {

sum = sum + A.data[y][k] * B.data[k][x];

}

C.data[y][x] = sum;

}

)";

wgpu::ShaderModuleDescriptor shaderModuleDescriptor{};

shaderModuleDescriptor.code = shaderCode;

wgpu::ShaderModule shaderModule = device.CreateShaderModule(&shaderModuleDescriptor);

wgpu::PipelineLayoutDescriptor pipelineLayoutDescriptor{};

wgpu::PipelineLayout pipelineLayout = device.CreatePipelineLayout(&pipelineLayoutDescriptor);

wgpu::ComputePipelineDescriptor pipelineDescriptor{};

pipelineDescriptor.layout = pipelineLayout;

pipelineDescriptor.compute.module = shaderModule;

pipelineDescriptor.compute.entryPoint = "main";

pipeline = device.CreateComputePipeline(&pipelineDescriptor);

std::vector<float> matrixA = { };

std::vector<float> matrixB = { };

std::vector<float> matrixC(16, 0);

}

int main() {

wgpu::Device device = InitializeDawnDevice();

wgpu::ComputePipeline pipeline;

wgpu::BindGroup bindGroup;

CreateMatrixMultiplicationPipeline(device, pipeline, bindGroup);

return 0;

}

但是文档少,写了三天还是没跑起来

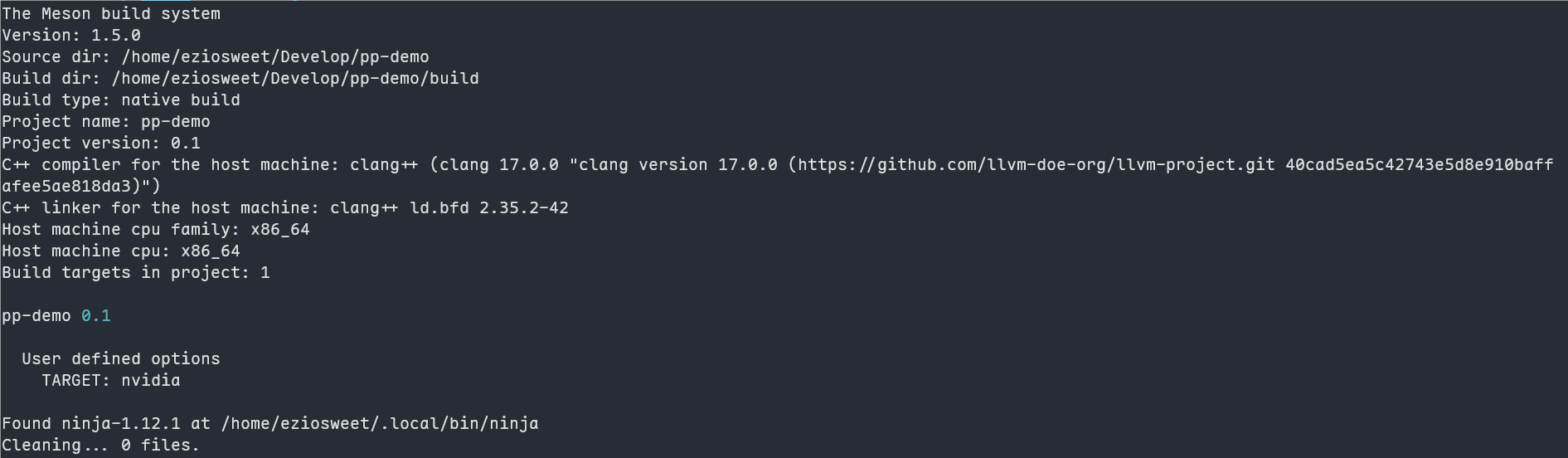

编译期实现demo

采用和psc-config/psc类似的思路,不过使用OpenACC实现

Clacc

Clacc 是一个向 Clang 和 LLVM 添加 OpenACC 支持的项目,同时提供了OpenACC的CPU支持。

通过在编译期添加命令,Clacc可以根据编译命令,自动编译到在指定的平台上

一个简单的demo

project('pp-demo', 'cpp',

version : '0.1',

default_options : ['warning_level=3'])

incl = include_directories('/home/eziosweet/.local/include')

target = get_option('TARGET')

if target == 'host'

exe = executable('pp-demo', 'src/acc.cpp',include_directories: incl,cpp_args: ['-fopenacc','-Wno-openacc-and-cxx'])

elif target == 'nvidia'

exe = executable('pp-demo', 'src/acc.cpp',include_directories: incl,cpp_args: ['-fopenacc','-fopenmp-targets=nvptx64-nvidia-cuda','-Wno-openacc-and-cxx'])

else

exe = executable('pp-demo', 'src/acc.cpp',include_directories: incl,cpp_args:['-fopenacc','-fopenmp-targets=amdgcn-amd-amdhsa','-Wno-openacc-and-cxx'])

endif

test('basic', exe)

有:

#include <iostream>

#include <openacc.h>

using namespace std;

int main() {

int i = 0;

#pragma acc parallel

{

#pragma acc loop

for (i = 0; i < 16; i++) {

printf("i=%d\n",i);

}

}

return 0;

}